Leveraging Technology for a Full Picture

Building a full picture of a complex issue means using technology to integrate diverse data sources, apply AI, and support human-technology collaboration so that hidden insights and patterns come to light. We’ll look at how these methods apply in practice, walk through a case study, and consider the ethical implications along the way.

This guide provides a roadmap for reaching a holistic understanding of a subject, from business strategy to scientific discovery, by leveraging technology. We’ll outline the process, from defining the scope of the “full picture” to communicating findings effectively.

Defining the Scope of “Full Picture”

The quest for a “full picture” is a common aspiration in many fields, from business strategy to scientific research. Leveraging technology offers powerful tools to move beyond fragmented data points and synthesize a comprehensive understanding. This necessitates a clear definition of what constitutes a “full picture” in this context, which goes beyond simply collecting more data.

A “full picture” isn’t merely a collection of disparate facts, but a coherent and interconnected understanding of a system, process, or phenomenon. It demands a synthesis of information from various sources, acknowledging diverse perspectives, and considering the evolving context over time. A partial picture, in contrast, offers a limited view, potentially leading to flawed interpretations and ineffective strategies. Technology can be a bridge, connecting the scattered pieces of information into holistic, actionable insight.

Defining a Complete Picture

A complete picture is a comprehensive and interconnected understanding of a subject, integrating data, perspectives, and timeframes to produce a robust and nuanced representation. It differs from a partial picture, which focuses on specific aspects, potentially omitting crucial elements and leading to misinterpretations. Technology plays a vital role in bridging this gap by enabling the integration of diverse data sources, perspectives, and historical context.

Dimensions of a Full Picture

A full picture encompasses several key dimensions:

- Data Sources: A full picture relies on a diverse range of data sources, including internal databases, external APIs, public datasets, and social media feeds. Drawing on varied sources broadens the range of perspectives and helps reduce the bias that any single source carries, although it cannot eliminate bias on its own.

- Perspectives: Recognizing the limitations of a single viewpoint is crucial. A complete picture must consider multiple perspectives, including those of stakeholders, competitors, and the general public. For example, analyzing customer feedback alongside sales data can provide a more holistic understanding of consumer behavior.

- Timeframes: Understanding the evolution of a situation is critical. A full picture incorporates historical data, real-time information, and projected trends. For instance, analyzing historical sales data alongside current market trends can provide insights into future sales projections.

Data Categorization and Organization

A structured framework is essential for managing the vast amount of data involved in creating a full picture. This framework should allow for easy access, analysis, and interpretation of data points.

- Data Classification: Data points should be classified into relevant categories. For instance, customer data could be categorized by demographics, purchase history, and interaction with customer support. This structured approach facilitates analysis and allows for the identification of patterns and relationships.

- Data Linking: Establishing connections between different data points is vital. For example, linking customer demographics to purchase history reveals insights into purchasing preferences. This process allows for a richer understanding of the interconnectedness within the data.

- Data Visualization: Visual representations of data can provide a clear and concise overview of complex relationships. For example, a heatmap illustrating sales trends across different regions can highlight areas of high and low performance, facilitating targeted strategies.
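
The classification and linking steps above can be sketched in a few lines of plain Python. This is only an illustration: the sample records, the `age_band` cut-offs, and the field names are hypothetical, and real data would come from a CRM and a sales database rather than inline lists.

```python
from collections import defaultdict

# Hypothetical sample records standing in for a CRM export and a sales table.
customers = [
    {"customer_id": 1, "age": 24},
    {"customer_id": 2, "age": 37},
    {"customer_id": 3, "age": 58},
]
purchases = [
    {"customer_id": 1, "amount": 20.0},
    {"customer_id": 1, "amount": 35.0},
    {"customer_id": 2, "amount": 120.0},
    {"customer_id": 3, "amount": 60.0},
    {"customer_id": 3, "amount": 15.0},
]

def age_band(age):
    """Classification: assign a customer to a demographic category (cut-offs are arbitrary)."""
    if age < 30:
        return "under_30"
    if age < 50:
        return "30_to_49"
    return "50_plus"

def spend_by_age_band(customers, purchases):
    """Linking: join purchases to customers on customer_id, then total spend per band."""
    band_of = {c["customer_id"]: age_band(c["age"]) for c in customers}
    totals = defaultdict(float)
    for p in purchases:
        band = band_of.get(p["customer_id"])
        if band is not None:  # ignore purchases with no matching customer
            totals[band] += p["amount"]
    return dict(totals)

totals = spend_by_age_band(customers, purchases)
print(totals)
```

The join key (`customer_id`) is what makes the two sources linkable; without a shared, consistent identifier, this step is usually where integration effort concentrates.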

Example Framework

A practical framework for organizing data could include the following table:

| Data Point | Category | Source | Timeframe |
|---|---|---|---|
| Customer Age | Demographics | Internal CRM | 2023-2024 |
| Purchase History | Customer Behavior | Sales Database | 2020-Present |
| Customer Support Interactions | Customer Interaction | Support Ticketing System | 2022-Present |

This structured approach allows for efficient data retrieval and analysis, enabling a more comprehensive understanding of the data’s interconnectedness.
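
One possible way to make such a framework queryable is to hold each row as a small typed record. The sketch below mirrors the example table; the `DataPoint` class and the `find` helper are hypothetical names introduced for illustration, not part of any particular tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DataPoint:
    name: str
    category: str
    source: str
    timeframe: str

# Entries copied from the example framework table.
CATALOG = [
    DataPoint("Customer Age", "Demographics", "Internal CRM", "2023-2024"),
    DataPoint("Purchase History", "Customer Behavior", "Sales Database", "2020-Present"),
    DataPoint("Customer Support Interactions", "Customer Interaction",
              "Support Ticketing System", "2022-Present"),
]

def find(catalog, **criteria):
    """Return the entries whose fields equal every given criterion."""
    return [dp for dp in catalog
            if all(getattr(dp, key) == value for key, value in criteria.items())]

crm_points = find(CATALOG, source="Internal CRM")
print([dp.name for dp in crm_points])
```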

Data Integration and Synthesis

Building a comprehensive understanding, or “full picture,” often requires combining information from various sources. This necessitates sophisticated data integration techniques to combine diverse data types and formats. Effective data integration is critical for accurate analysis and insightful decision-making. The key is not just gathering data, but synthesizing it into a coherent whole.

Data integration is the process of combining data from different sources into a single, unified view. This process is crucial for creating a comprehensive understanding of a particular subject or issue. Successful integration hinges on several factors, including the choice of appropriate technologies, careful consideration of data quality, and a methodical approach to data cleaning and transformation.

Methods for Integrating Diverse Data Sources

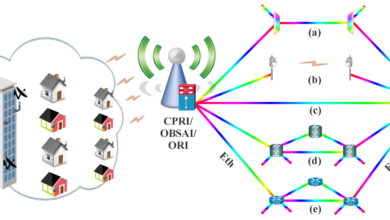

Different technologies are used to integrate data from various sources. Database management systems (DBMS) play a vital role in organizing and managing the combined data. Data warehousing solutions are often employed for storing and retrieving large volumes of integrated data. Application Programming Interfaces (APIs) allow seamless communication between different systems and data sources. Data integration tools automate the process of combining data, reducing manual effort and minimizing errors.

Cloud-based platforms provide scalable and cost-effective solutions for integrating and managing diverse data sets.
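
As a small-scale illustration of a DBMS providing a single, unified view, the sketch below loads two sources into an in-memory SQLite database and joins them. The table names, columns, and rows are assumptions made up for the example; a production setup would more likely use a data warehouse or a dedicated integration tool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Two hypothetical sources: a CRM extract and a support ticket extract.
conn.executescript("""
    CREATE TABLE crm_customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE support_tickets (
        ticket_id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT
    );
""")
conn.executemany("INSERT INTO crm_customers VALUES (?, ?)",
                 [(1, "North"), (2, "South")])
conn.executemany("INSERT INTO support_tickets VALUES (?, ?, ?)",
                 [(10, 1, "open"), (11, 1, "closed"), (12, 2, "open")])

# The unified view: one row per customer with a ticket count from the other source.
conn.execute("""
    CREATE VIEW customer_overview AS
    SELECT c.customer_id, c.region, COUNT(t.ticket_id) AS ticket_count
    FROM crm_customers AS c
    LEFT JOIN support_tickets AS t ON t.customer_id = c.customer_id
    GROUP BY c.customer_id, c.region
""")

rows = conn.execute(
    "SELECT * FROM customer_overview ORDER BY customer_id"
).fetchall()
print(rows)
```

The `LEFT JOIN` keeps customers who have no tickets at all, which matters when the goal is a complete view rather than only the overlap between sources.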

Data Types and Integration

Data comes in various forms, each requiring specific methods for integration. Structured data, like that found in relational databases, is organized in a predefined format, making it relatively easy to integrate. Unstructured data, such as text documents or social media posts, is less organized. Specialized techniques, like natural language processing (NLP) and machine learning, are needed to extract meaningful information from this type of data and integrate it with structured data.

Semi-structured data, such as XML or JSON, falls between structured and unstructured data, possessing some structure but lacking the rigid organization of relational databases. Integration methods for semi-structured data often leverage parsing techniques to convert the data into a usable format for analysis.
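
A minimal sketch of the parsing step for semi-structured data: nested JSON records are flattened into rows with a shared set of columns. The sample payload and the dotted key convention (`user.name`) are assumptions chosen for the example.

```python
import json

# Hypothetical JSON payload; note that the second record lacks a city.
raw = """
[
  {"id": 1, "user": {"name": "Ana", "city": "Lisbon"}, "tags": ["vip", "newsletter"]},
  {"id": 2, "user": {"name": "Ben"}, "tags": []}
]
"""

def flatten(record, parent_key="", sep="."):
    """Recursively flatten nested dicts; lists are joined into one delimited string."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        elif isinstance(value, list):
            flat[full_key] = ";".join(str(v) for v in value)
        else:
            flat[full_key] = value
    return flat

flat_rows = [flatten(record) for record in json.loads(raw)]
columns = sorted({key for row in flat_rows for key in row})
# Missing fields become None so every row has the same width.
table = [[row.get(col) for col in columns] for row in flat_rows]
print(columns)
print(table)
```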

Importance of Data Quality and Consistency

Data quality and consistency are paramount for accurate analysis. Inconsistent data can lead to inaccurate conclusions and misleading insights. Data quality issues can stem from errors in data entry, inconsistencies in data formats, or discrepancies between different data sources. Data validation techniques and processes can be used to identify and address these inconsistencies. Regular data quality checks and audits ensure that the integrated data is reliable and trustworthy.
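
Validation checks of this kind can be expressed as small rules that run over each record. The sketch below is illustrative only: the field names, the deliberately crude email check, and the `YYYY-MM-DD` date convention are assumptions, not requirements from any standard.

```python
from datetime import datetime

def validate(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    if "@" not in (record.get("email") or ""):
        problems.append("malformed email")  # crude check, for illustration only
    try:
        datetime.strptime(record.get("signup_date") or "", "%Y-%m-%d")
    except ValueError:
        problems.append("signup_date not in YYYY-MM-DD format")
    return problems

# Hypothetical records: the second one fails all three checks.
records = [
    {"customer_id": "C1", "email": "ana@example.com", "signup_date": "2023-04-01"},
    {"customer_id": "", "email": "ben.example.com", "signup_date": "01/04/2023"},
]
report = {index: validate(record) for index, record in enumerate(records)}
print(report)
```

Collecting problems per record, rather than stopping at the first failure, makes the output usable as an audit report.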

Data Cleaning and Transformation

Data cleaning and transformation are essential steps in the integration process. Data cleaning involves identifying and correcting errors, inconsistencies, and missing values in the data. Transformation involves converting data from one format to another or performing calculations to prepare it for analysis. Data normalization, a critical transformation step, ensures data consistency and reduces redundancy. Standardization of data formats and units across different data sources is crucial for accurate analysis.

The choice of cleaning and transformation methods depends heavily on the specific data sources and the desired outcomes of the analysis.
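
To make cleaning and transformation concrete, the sketch below trims and lowercases product names, converts mixed weight units to kilograms, and fills a missing weight with the median of the known values. The sample rows are invented, and median imputation is just one possible choice; whether it is appropriate depends on the data.

```python
from statistics import median

# Hypothetical raw rows with inconsistent casing, units, and one missing value.
raw_rows = [
    {"product": " Widget ", "weight": "1.5 kg"},
    {"product": "gadget", "weight": "750 g"},
    {"product": "Gizmo", "weight": ""},
    {"product": "WIDGET", "weight": "2 kg"},
]

UNITS_PER_KG = {"kg": 1, "g": 1000}

def to_kilograms(text):
    """Convert strings like '750 g' or '1.5 kg' to kilograms; return None if blank."""
    text = text.strip().lower()
    if not text:
        return None
    value, unit = text.split()
    return float(value) / UNITS_PER_KG[unit]

def clean(rows):
    """Standardize names and units, then impute missing weights with the median."""
    cleaned = [
        {"product": r["product"].strip().lower(), "weight_kg": to_kilograms(r["weight"])}
        for r in rows
    ]
    known = [r["weight_kg"] for r in cleaned if r["weight_kg"] is not None]
    fill_value = median(known)
    for r in cleaned:
        if r["weight_kg"] is None:
            r["weight_kg"] = fill_value
    return cleaned

cleaned = clean(raw_rows)
print(cleaned)
```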

Technology Tools and Platforms

Building a complete picture requires a robust technological foundation. Choosing the right tools and platforms is crucial for efficiently gathering, analyzing, and visualizing data. This section delves into various options, highlighting their strengths, weaknesses, and suitability for different types of complex information. The key is to select tools that not only facilitate data integration but also empower insightful analysis and clear communication of results.

Data Gathering Tools

Data collection is the first step. Different tools excel in different contexts. Spreadsheet software, such as Microsoft Excel or Google Sheets, provides a foundational platform for basic data entry and manipulation. Databases, like MySQL or PostgreSQL, offer structured storage and querying capabilities for larger datasets, particularly when relational connections between data points are important. Specialized data scraping tools are essential for extracting data from websites and APIs.

Tools like Scrapy or Beautiful Soup allow for automated data extraction, streamlining the process for large-scale projects. Web analytics platforms (e.g., Google Analytics) are critical for understanding user behavior and website performance. Each tool has its strengths and weaknesses. Spreadsheets are excellent for small-scale projects, while databases are vital for large, complex datasets.

Data Analysis Platforms

Data analysis platforms play a critical role in transforming raw data into actionable insights. Statistical environments such as R, or Python with libraries like pandas and scikit-learn, support advanced statistical modeling and machine learning. These tools are powerful for uncovering patterns, trends, and correlations within the data. Cloud-based platforms like Amazon SageMaker or Google Cloud’s Vertex AI (the successor to AI Platform) provide scalable computing resources for handling large datasets and complex models.

The choice of platform depends on the complexity of the analysis and the volume of data being processed. While R excels in statistical modeling, Python is a versatile language, supporting a broader range of data analysis tasks.

Data Visualization Platforms

Effective visualization is crucial for conveying complex information clearly. Tableau and Power BI are popular platforms for creating interactive dashboards and visualizations. These tools allow users to explore data in various ways, revealing hidden trends and patterns. For specific scientific data, specialized visualization libraries in Python, like Matplotlib and Seaborn, provide high-level control and customization. Choosing the right visualization platform depends on the target audience, the nature of the data, and the desired level of interactivity.

For instance, Tableau is strong in interactive dashboards for business audiences, while Python libraries offer more flexibility for customized representations of scientific data. Consider the level of interactivity needed; static charts might suffice for some situations, while dynamic dashboards are crucial for real-time monitoring.

Critical Technical Considerations

Choosing the appropriate technology requires careful consideration of several technical aspects. Data compatibility is paramount. Ensure that the tools selected can handle the various data formats involved. Scalability is another important factor. The chosen tools should be able to handle increasing data volumes as the project evolves.

Integration with existing systems is also crucial for seamless data flow. Finally, consider the skills and expertise of the team. Choose tools that align with the team’s existing knowledge and capabilities. Consider the long-term maintenance and support needs of the chosen technology stack.

Data compatibility, scalability, integration, and team expertise are critical factors in selecting the right technology.

Leveraging AI and Machine Learning

Unlocking the true potential of data requires sophisticated tools, and AI and machine learning (ML) are critical for extracting actionable insights from complex datasets. These technologies allow us to move beyond simple correlations to surface subtler, multi-variable patterns and predict future trends, although establishing genuinely causal relationships still requires careful study design and domain knowledge. By integrating AI and ML, we can transform raw data into a comprehensive understanding of the “full picture,” enabling more informed decision-making.

AI for Insight Extraction

AI algorithms excel at identifying patterns and relationships within data that might be invisible to human observation. Machine learning models, particularly those based on deep learning, can analyze vast amounts of structured and unstructured data, extracting meaningful insights. This includes identifying anomalies, classifying information, and forecasting future outcomes. For instance, an AI model trained on sales data might identify a correlation between specific marketing campaigns and increased customer acquisition, revealing previously unknown patterns.

Predictive Modeling for Forecasting and Trend Identification

Predictive modeling is a powerful application of AI for forecasting and trend identification. By training models on historical data, AI can predict future outcomes with varying degrees of accuracy. For example, in the retail industry, predictive models can forecast demand for specific products, allowing companies to optimize inventory levels and reduce waste. Another example is using weather data to predict crop yields, allowing farmers to plan accordingly and potentially mitigate risks.

These predictions are not just theoretical; they’re based on statistical analysis and algorithms that learn from past data.
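
The simplest instance of "learning from past data" is fitting a straight line to a historical series and extrapolating one step ahead. The sketch below uses ordinary least squares written out by hand; the monthly sales figures are invented, and a real demand forecast would usually need to account for seasonality and uncertainty as well.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical history: units sold in months 1 through 6.
months = [1, 2, 3, 4, 5, 6]
units_sold = [110, 118, 131, 139, 152, 160]

slope, intercept = fit_line(months, units_sold)
forecast_month_7 = slope * 7 + intercept
print(round(slope, 2), round(intercept, 2), round(forecast_month_7, 1))
```

A linear trend is a deliberately weak model; its value here is that every number in the forecast can be traced back to the historical data by hand.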

AI for Pattern and Relationship Identification in Complex Datasets

AI algorithms can identify complex patterns and relationships within large, diverse datasets, often transcending human capabilities. Consider a financial institution analyzing transaction data to identify fraudulent activity. AI algorithms can sift through millions of transactions, identifying unusual patterns that might indicate fraudulent behavior. This ability to detect subtle anomalies and relationships within complex data is critical in many fields, including healthcare, finance, and manufacturing.
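
Production fraud systems rely on far richer models, but the core idea of flagging values that deviate sharply from the norm can be shown with a robust statistical rule. The sketch below uses a modified z-score based on the median absolute deviation; the transaction amounts are invented, and the 0.6745 constant and 3.5 cut-off follow a commonly cited convention rather than anything specific to this article.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return indices whose modified z-score (median/MAD based) exceeds the threshold."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [
        index for index, amount in enumerate(amounts)
        if 0.6745 * abs(amount - med) / mad > threshold
    ]

# Hypothetical transaction amounts; one is far outside the usual range.
transactions = [42.0, 39.5, 41.2, 40.8, 43.1, 38.9, 950.0, 41.7]
suspicious = flag_outliers(transactions)
print(suspicious)
```

Using the median instead of the mean keeps a single extreme value from distorting the baseline it is being compared against.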

AI-Driven Full Picture Flowchart

    ┌─────────────────────┐
    │     Data Input      │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │    Data Cleaning    │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │ Feature Engineering │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │  AI Model Training  │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │  Model Evaluation   │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │ Insight Extraction  │
    └──────────┬──────────┘
               │
               ▼
    ┌─────────────────────┐
    │ Actionable Insights │
    └─────────────────────┘

This flowchart outlines the process of leveraging AI to create a comprehensive understanding of the “full picture.” The process starts with data input and proceeds through stages of cleaning, feature engineering, model training, evaluation, and finally, the extraction of actionable insights.

Each step is crucial for ensuring the quality and accuracy of the final insights.
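
The stages can be traced end to end on a toy problem. In the sketch below, the customer rows, the engineered feature (support calls per month of tenure), and the one-threshold "model" are all assumptions chosen to keep the example small; they stand in for whatever data and algorithm a real project would use.

```python
# Data input: hypothetical customer rows, one with a missing value.
rows = [
    {"tenure_months": 24, "support_calls": 2, "churned": False},
    {"tenure_months": 6, "support_calls": 5, "churned": True},
    {"tenure_months": 12, "support_calls": None, "churned": False},
    {"tenure_months": 10, "support_calls": 1, "churned": False},
    {"tenure_months": 4, "support_calls": 3, "churned": True},
    {"tenure_months": 20, "support_calls": 4, "churned": False},
    {"tenure_months": 5, "support_calls": 4, "churned": True},
    {"tenure_months": 30, "support_calls": 3, "churned": False},
]

def clean(rows):
    """Data cleaning: drop rows with a missing support_calls value."""
    return [r for r in rows if r["support_calls"] is not None]

def engineer(rows):
    """Feature engineering: support calls per month of tenure, paired with the label."""
    return [(r["support_calls"] / r["tenure_months"], r["churned"]) for r in rows]

def accuracy(threshold, examples):
    """Share of examples where (feature >= threshold) matches the churn label."""
    return sum((x >= threshold) == label for x, label in examples) / len(examples)

def train(examples):
    """Model training: choose the feature threshold with the best training accuracy."""
    candidates = sorted({x for x, _ in examples})
    return max(candidates, key=lambda t: accuracy(t, examples))

examples = engineer(clean(rows))
train_set, holdout = examples[:5], examples[5:]

threshold = train(train_set)
holdout_accuracy = accuracy(threshold, holdout)  # model evaluation on unseen rows

# Insight extraction: turn the fitted threshold into a statement a person can act on.
insight = f"Customers with {threshold:.2f}+ support calls per month of tenure are at risk"
print(threshold, holdout_accuracy, insight)
```

Evaluating on rows the model never saw during training is what separates the evaluation stage from simply re-reading the training result.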

Human-Technology Collaboration

The digital age has ushered in an era of unprecedented data availability. While technology excels at processing vast datasets and uncovering intricate patterns, the crucial element of human interpretation often gets overlooked. Humans bring context, critical thinking, and nuanced understanding that machines lack. Effective data utilization hinges on a symbiotic relationship between human expertise and technological prowess.

A balanced approach ensures that insights are not just generated but also meaningfully applied.

The Importance of Human Input in Interpreting Insights

Technology excels at identifying correlations and trends, but often struggles with the “why” behind them. Human input is indispensable in interpreting these insights within the broader context of a specific situation. Consider a predictive model identifying a potential sales decline in a particular region. Without human analysis of factors like economic downturns, competitor actions, or local events, the insight remains isolated and potentially misleading.

A human analyst can contextualize the data, offering a more accurate interpretation and driving actionable strategies.

Examples of Human Interaction with Technology for Refining Insights

Humans can interact with technology in various ways to refine and validate insights. A common method is interactive data visualization. A user can manipulate charts and graphs, filtering data and exploring different angles to gain a deeper understanding of the trends. Another example is employing human expertise in data quality assurance. Humans can review and validate the data ingested by the system, correcting errors and identifying inconsistencies that technology might miss.

The Role of Human Expertise in Contextual Interpretation

Human expertise is critical in understanding data within its specific context. An expert in marketing, for example, can interpret sales data within the framework of consumer behavior, market trends, and competitor strategies. Their understanding of the interplay between these factors allows for a more accurate assessment of the sales decline and a more tailored approach to mitigating it.

A Workflow for Human-Technology Collaboration

A well-structured workflow facilitates the collaboration between humans and technology. This workflow should include these key steps:

- Data Ingestion and Preprocessing: Technology automates the collection and initial cleaning of data. This frees human analysts to focus on quality assurance and contextual understanding.

- Technology-Driven Insight Generation: Sophisticated algorithms and machine learning models identify patterns and correlations in the data, generating candidate insights. This stage is primarily automated and surfaces what is happening; explaining why it is happening is left to the next step.

- Human Interpretation and Validation: Experts scrutinize the insights generated by technology, examining the context and the underlying reasons for the patterns. They evaluate the plausibility of the insights and seek corroborating evidence.

- Refinement and Actionable Insights: Human analysts refine the insights by adding contextual knowledge and identifying potential actions based on their findings. They translate insights into actionable strategies, focusing on the “what to do next” aspect.

- Feedback Loop: The workflow should incorporate a feedback loop. The insights and actions taken by humans can be fed back into the technology to improve the algorithms and predictive models, ensuring continuous improvement.
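
One way to sketch this workflow in code is a review queue: insights below a confidence cut-off are routed to a human, and the reviewer's decision and note are stored so they can later inform the model. Everything here is hypothetical, including the `Insight` class, the 0.8 cut-off, and the sample insights and reviewer note.

```python
from dataclasses import dataclass, field

@dataclass
class Insight:
    summary: str
    confidence: float  # model-reported score in [0, 1]; an assumed convention
    status: str = "pending"
    notes: list = field(default_factory=list)

def triage(insights, review_below=0.8):
    """Route low-confidence insights to human review; accept the rest provisionally."""
    queue = []
    for insight in insights:
        if insight.confidence < review_below:
            insight.status = "needs_review"
            queue.append(insight)
        else:
            insight.status = "auto_accepted"
    return queue

def record_review(insight, approved, note):
    """Human validation: store the decision and the context the reviewer supplied."""
    insight.status = "approved" if approved else "rejected"
    insight.notes.append(note)

insights = [
    Insight("Sales decline forecast in Region A", 0.62),
    Insight("Seasonal uptick in product returns", 0.91),
]
queue = triage(insights)
record_review(queue[0], approved=True, note="Consistent with a local competitor launch")

# Feedback loop: reviewed items with notes are the material for later retraining.
feedback = [(i.summary, i.status, i.notes) for i in insights if i.notes]
print(feedback)
```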

Visualization and Communication

Transforming raw data into actionable insights hinges on effective visualization and communication. A compelling narrative woven from visualized data can resonate with diverse audiences, fostering understanding and driving informed decisions. Whether you’re presenting findings to investors, explaining trends to colleagues, or simply exploring patterns yourself, the right visualization tools and techniques are crucial. Clear and engaging visualizations empower stakeholders to grasp complex information quickly and retain key takeaways.

Visualizing Complex Data

Different visualization methods are suited to various types of data. Choosing the appropriate method is essential for effectively communicating insights. A well-designed visualization should immediately convey the core message, minimizing the need for lengthy explanations.

| Visualization Type | Description | Example Data |
|---|---|---|
| Bar Charts | Compare quantities across categories. Excellent for showing differences in values. | Sales figures for different product lines over time. |
| Line Graphs | Display trends and changes over time. Useful for showing continuous data. | Stock prices fluctuating throughout the year. |
| Scatter Plots | Show the relationship between two variables. Identify correlations or patterns. | Customer demographics (age vs. spending habits). |
| Heatmaps | Represent data as colors, highlighting areas with high or low values. | Sales performance by region and product. |
| Pie Charts | Show proportions of a whole. Effective for displaying percentages. | Market share distribution for various companies. |
| Maps | Visualize data geographically. Excellent for identifying regional patterns. | Distribution of disease outbreaks across a city. |

Effectiveness of Visualization Techniques

The effectiveness of a visualization hinges on its clarity and ability to communicate the core message without ambiguity. For instance, a cluttered bar chart with too many categories can be confusing and lose its impact. Conversely, a well-structured line graph can clearly illustrate a trend over time. Color palettes, axis labels, and legend clarity are all crucial elements for a meaningful visual representation.

Choosing the right visualization type, and appropriately labeling elements, is key.
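
The same principles (a title, clear labels, and bars scaled to a common maximum) apply even to the plainest output. The sketch below renders a labeled bar chart as text using only the standard library; the regional figures are invented, and a real report would more likely use a plotting library or a dashboard tool.

```python
def text_bar_chart(values, width=30, title=""):
    """Render a labeled horizontal bar chart as text, scaled to the largest value."""
    peak = max(values.values())
    label_width = max(len(label) for label in values)
    lines = [title] if title else []
    for label, value in values.items():
        bar = "#" * round(width * value / peak)
        lines.append(f"{label:<{label_width}} | {bar} {value}")
    return "\n".join(lines)

# Hypothetical units sold per region.
sales = {"North": 120, "South": 60, "East": 90}
chart = text_bar_chart(sales, width=20, title="Units sold by region")
print(chart)
```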

Communicating Findings to Diverse Audiences

Effective communication goes beyond the visualization itself. Understanding the audience’s background and knowledge level is critical. Tailoring the narrative to resonate with their needs and expectations is paramount. For instance, a presentation to technical experts might require more detailed data points and complex charts, while a presentation to a broader audience would benefit from simplified visuals and a concise narrative.

Clear, concise language and brief explanations are essential.

Crafting a Compelling Narrative

A compelling narrative around data goes beyond simply displaying figures. It involves framing the data within a story that connects the findings to a broader context. This narrative should highlight the significance of the data, explaining why it matters and what conclusions can be drawn. Identifying key takeaways and using storytelling techniques strengthen the message’s impact and keep the audience engaged.

This narrative should be clear and concise, allowing the audience to easily understand the findings and their implications.

Ethical Considerations and Limitations

Building a “full picture” through technology requires careful consideration of ethical implications. The sheer volume and complexity of data involved necessitate a proactive approach to bias, privacy, transparency, and accountability. Neglecting these factors can lead to inaccurate or unfair outcomes, undermining the very value of the integrated information.

Leveraging technology to create a holistic understanding must prioritize responsible data handling. This means understanding the potential pitfalls and actively working to mitigate them. A robust ethical framework is essential to ensure the accuracy, fairness, and trustworthiness of the conclusions drawn from the data.

Potential Biases in Data

Data sets often reflect existing societal biases, which can be inadvertently amplified by technological analysis. For instance, if a dataset used for predicting loan eligibility is predominantly populated with data from a specific demographic group, the model might learn to favor that group over others, potentially perpetuating existing inequalities.

To mitigate this, diverse and representative datasets are crucial. Regular audits of data sources are essential to identify and address potential biases. Machine learning algorithms themselves should be evaluated for their inherent biases. Techniques like fairness-aware machine learning can be used to develop models that are less susceptible to biased outcomes.
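
A basic audit of this kind compares outcome rates across groups. The sketch below computes approval rates per group and the ratio of the lowest to the highest rate; the decisions are invented, and the "four-fifths" reference point mentioned in the comment is a rule of thumb from US employment guidance, used here only as an example benchmark.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; assumes at least one approval overall."""
    return min(rates.values()) / max(rates.values())

# Hypothetical loan decisions for two groups of ten applicants each.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
# A ratio well below 0.8 is often treated as a signal to investigate further.
print(rates, round(ratio, 3))
```

A low ratio does not prove the model is unfair, and a high one does not prove it is fair; it is a prompt for the human review described elsewhere in this guide.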

Data Privacy and Security Concerns

Protecting sensitive information is paramount. Data breaches and misuse of private data can have severe consequences for individuals and organizations. Robust security protocols and data encryption are vital to safeguard sensitive information.

Adherence to the data privacy regulations that apply to your organization, such as GDPR or CCPA, is mandatory. Organizations must implement transparent data handling policies and procedures, ensuring compliance with applicable laws. Data anonymization and pseudonymization techniques can help reduce risk while preserving most of the analytical value of the data.
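
Pseudonymization can be sketched with a keyed hash: the same identifier always maps to the same token, so records remain linkable, but the original value is not stored in the analysis dataset. The key below is a placeholder and the records are invented. Note that pseudonymized data can still count as personal data under regulations such as GDPR, so this technique reduces risk rather than removing it.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# Placeholder key for illustration only; a real key belongs in a secrets manager.
KEY = b"replace-with-a-secret-key"

# Hypothetical records; the first and third belong to the same customer.
records = [
    {"email": "ana@example.com", "plan": "pro"},
    {"email": "ben@example.com", "plan": "basic"},
    {"email": "ana@example.com", "plan": "pro"},
]
safe_records = [
    {"customer_token": pseudonymize(r["email"], KEY), "plan": r["plan"]}
    for r in records
]
print(safe_records[0]["customer_token"][:12])
```

Using a keyed hash rather than a plain one means someone without the key cannot simply hash a list of known emails to reverse the tokens.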

Transparency and Accountability in Data Analysis

Transparency in the data analysis process is essential for building trust and enabling accountability. Clear documentation of data sources, methodologies, and assumptions used in the analysis is vital. This allows stakeholders to understand the rationale behind the conclusions and identify potential weaknesses or biases.

Establishing clear lines of accountability for the data analysis process is crucial. Individuals or teams responsible for collecting, processing, and interpreting the data should be held accountable for the quality and integrity of their work. Mechanisms for independent verification and audit of the analysis process are necessary to ensure transparency and accuracy.

Checklist for Ethical Data Handling

- Data Source Validation: Verify the accuracy, completeness, and representativeness of data sources. Assess for potential biases and inconsistencies.

- Data Anonymization/Pseudonymization: Implement appropriate techniques to protect sensitive information, complying with relevant regulations.

- Bias Mitigation Strategies: Employ methods like fairness-aware machine learning and diverse datasets to reduce the risk of biased outcomes.

- Transparency and Documentation: Maintain detailed records of data sources, analysis methodologies, and assumptions. Document the rationale behind conclusions.

- Security Protocols: Implement robust security measures to protect data from unauthorized access, breaches, and misuse.

- Compliance with Regulations: Ensure adherence to relevant data privacy regulations (e.g., GDPR, CCPA).

- Regular Audits: Conduct periodic audits of data sources, analysis methodologies, and outcomes to identify and address potential issues.

Case Studies and Examples

Building a comprehensive understanding often requires piecing together diverse information. This necessitates a meticulous approach to data collection, integration, and analysis. Successful implementations of these strategies often rely on innovative technological solutions. This section will delve into real-world case studies showcasing how technology empowers us to build a complete picture.

A Successful Case Study: Predicting Customer Churn in the Telecom Industry

This case study focuses on a telecom company aiming to reduce customer churn. By leveraging a variety of data sources, including call logs, billing information, customer service interactions, and social media activity, the company developed a predictive model. The model was built using machine learning algorithms, enabling the company to identify customers at high risk of churn.

Challenges Faced and How They Were Overcome

The primary challenge was integrating data from disparate sources, each with its own format and structure. This involved significant data cleaning and transformation efforts. Furthermore, ensuring the model’s accuracy and reliability required rigorous testing and validation. The company overcame these obstacles by employing a robust data integration platform that standardized data formats and a cloud-based machine learning platform that allowed for rapid model training and deployment.

Key Lessons Learned

The project underscored the importance of a comprehensive data strategy. Effective data integration and the careful selection of appropriate machine learning algorithms are crucial for successful implementation. Furthermore, ongoing monitoring and refinement of the predictive model are essential for maintaining accuracy and relevance.

Specific Technologies Used

- Data Integration Platform: A cloud-based platform specializing in ETL (Extract, Transform, Load) processes, allowing the company to combine various data sources into a unified dataset. This platform handled the transformation of different data formats, standardizing data structures, and removing inconsistencies. A specific example is the use of Apache Kafka for real-time data ingestion and Apache Spark for data processing and transformation.

- Machine Learning Platform: A cloud-based platform that offered pre-built machine learning algorithms and tools to build, train, and deploy models. This platform provided tools for model monitoring, evaluation, and retraining, crucial for adapting to changing market conditions and ensuring model accuracy.

- Data Visualization Tools: Interactive dashboards and reporting tools were used to visualize model performance, customer behavior patterns, and churn risk factors. These tools provided a clear picture of the model’s accuracy and allowed for targeted interventions. One example is Tableau, a widely used data visualization platform.

Final Wrap-Up

In conclusion, leveraging technology to build a full picture is a multifaceted process requiring careful consideration of data integration, AI application, and human-technology collaboration. By understanding the ethical considerations and limitations, and by applying the strategies outlined in this guide, we can unlock powerful insights and make more informed decisions. Ultimately, the goal is to move beyond surface-level observations to a deeper, more complete understanding of any subject matter.